Open API Spec - An API-First Approach

Many enterprises today take an API-first approach to application development and data sharing. This approach shows up in several scenarios, such as breaking up a monolith into microservices, moving to the cloud, and adopting Kubernetes.

APIs are also a popular way to adopt a Service-Oriented Architecture (SOA). APIs are a key tenet of data sharing and logic reuse. An API in an application is comparable to a function in imperative programming: callers rely on its name, inputs, and outputs, not on how it is implemented.

The Open API spec is a key mechanism for stakeholders who create and deliver APIs. The spec often forms the blueprint for an application: what it exposes, how you can access it, and who can access it. It is also a way to share information across the roles identified in an SOA: the service provider, the service broker, and the service consumer. Each of these roles benefits from a clear specification of the API.
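To make the "blueprint" idea concrete, here is a minimal Python sketch. The spec below is a deliberately tiny, hypothetical Open API 3.0 document written as JSON (loosely modelled on the Petstore sample, not copied from it), and `list_operations` is an illustrative helper, not part of any tool. It reads back what the spec tells each role: which operations exist, how they are called, and whether a security requirement is declared.

```python
import json

# Hypothetical, minimal Open API 3.0 document. "paths" says what the
# application exposes and how to call it; "securitySchemes"/"security"
# say who may call it.
SPEC_JSON = """
{
  "openapi": "3.0.0",
  "info": {"title": "Petstore (sketch)", "version": "1.0.0"},
  "servers": [{"url": "http://petstore.example/v1"}],
  "components": {
    "securitySchemes": {
      "api_key": {"type": "apiKey", "name": "X-API-Key", "in": "header"}
    }
  },
  "paths": {
    "/pets": {
      "get":  {"operationId": "listPets"},
      "post": {"operationId": "createPet", "security": [{"api_key": []}]}
    },
    "/pets/{petId}": {
      "get": {"operationId": "showPetById",
              "parameters": [{"name": "petId", "in": "path", "required": true,
                              "schema": {"type": "string"}}]}
    }
  }
}
"""

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_operations(spec):
    """Return (METHOD, path, operationId, secured) for every operation."""
    global_security = spec.get("security", [])
    ops = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            # Operation-level "security" overrides the document-level list.
            # Simplification: any non-empty list counts as "secured".
            security = op.get("security", global_security)
            ops.append((method.upper(), path, op.get("operationId"), bool(security)))
    return ops


for op in list_operations(json.loads(SPEC_JSON)):
    print(op)
# ('GET', '/pets', 'listPets', False)
# ('POST', '/pets', 'createPet', True)
# ('GET', '/pets/{petId}', 'showPetById', False)
```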

Delivering And Consuming Your API

A spec written in the Open API format can also be used to automatically generate client and server code. The spec itself is language-agnostic, and code generators can produce the client or server in the implementation language of your choice.
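As a rough illustration of what a code generator does, the toy Python sketch below turns a few (method, path, operationId) triples, as they would be read from a spec's `paths` section, into a client class with one method per operation. Real generators are far more complete; the names `generate_client`, `to_snake` and `PetstoreClient` are hypothetical, and the generated methods only build the request instead of sending it.

```python
import re

# Hypothetical input: (METHOD, path template, operationId) triples.
OPERATIONS = [
    ("GET", "/pets", "listPets"),
    ("POST", "/pets", "createPet"),
    ("GET", "/pets/{petId}", "showPetById"),
]


def to_snake(name):
    """listPets -> list_pets, showPetById -> show_pet_by_id."""
    return re.sub(r"(?<!^)(?=[A-Z])", "_", name).lower()


def generate_client(operations, class_name="PetstoreClient"):
    """Emit Python source for a client class, one method per operation.

    Path parameters such as {petId} become method arguments and are
    substituted into the URL via an f-string in the generated code.
    """
    lines = [
        f"class {class_name}:",
        "    def __init__(self, base_url):",
        "        self.base_url = base_url.rstrip('/')",
        "",
    ]
    for method, path, op_id in operations:
        params = re.findall(r"\{(\w+)\}", path)
        args = "".join(f", {p}" for p in params)
        lines.append(f"    def {to_snake(op_id)}(self{args}):")
        # Generated body returns (method, url); sending it is left out.
        lines.append(f"        return ({method!r}, self.base_url + f{path!r})")
        lines.append("")
    return "\n".join(lines)


source = generate_client(OPERATIONS)
print(source)

namespace = {}
exec(source, namespace)  # load the generated class for a quick check
client = namespace["PetstoreClient"]("http://localhost:8080/v1/")
print(client.show_pet_by_id("42"))  # ('GET', 'http://localhost:8080/v1/pets/42')
```

The point is only that the spec carries enough structure (methods, path templates, operation names) to produce code mechanically, in whatever language the generator targets.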

Delivering and consuming an API, however, often involves an intermediate component: the API gateway. Features such as rate limiting, authentication and authorization, and API usage telemetry are frequently provided by the gateway. But the gateway has to be programmed for the APIs it serves, which is often time-consuming and error-prone.

What if the declarative configuration for your API gateway could be generated from the Open API spec, the same way your client and server code is? That configuration could then be used to program the gateway. Better still, what if the gateway could ingest the Open API spec directly? And taking it a step further, what if the same spec could program your API gateway whether it runs standalone or as a Kubernetes ingress?

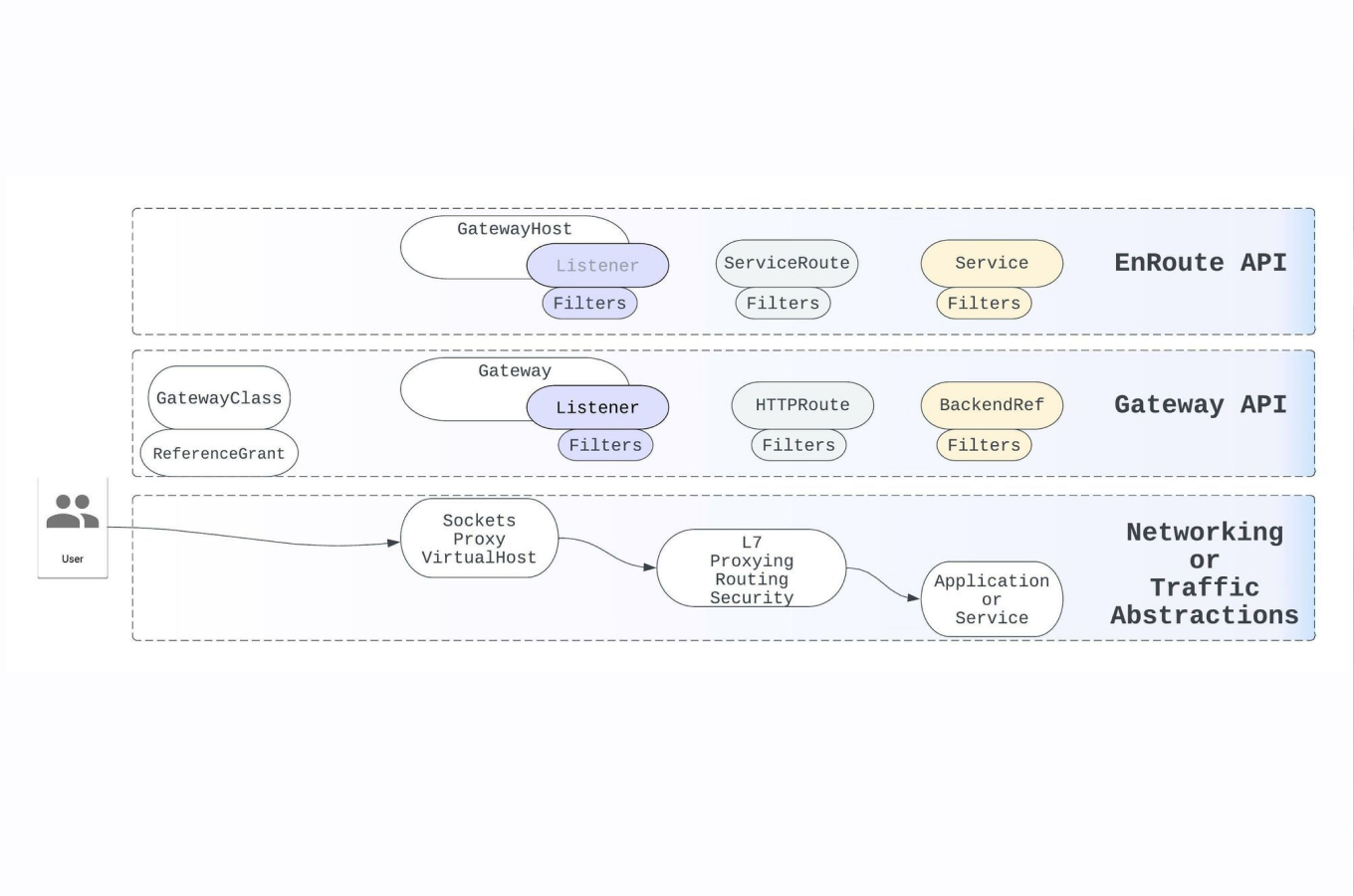
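Generating gateway configuration from the spec can be sketched in a few lines of Python. The route format below (`upstreams`, `routes`, `path_regex`, `methods`) is a made-up, generic schema, not Enroute's actual configuration format, and the spec is a hypothetical Petstore-like dict. It only shows the mapping: the server URL becomes an upstream, and each path with its HTTP methods becomes a route whose templated segments, such as `{petId}`, turn into a one-segment regex.

```python
import json
import re
from urllib.parse import urlparse

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}

# Hypothetical Petstore-like spec, already parsed into a dict.
PETSTORE = {
    "openapi": "3.0.0",
    "servers": [{"url": "http://petstore.local:8080/v1"}],
    "paths": {
        "/pets": {"get": {}, "post": {}},
        "/pets/{petId}": {"parameters": [], "get": {}},
    },
}


def path_to_regex(template):
    """Turn /pets/{petId} into ^/pets/[^/]+$ (a {param} matches one segment)."""
    pieces = re.split(r"(\{[^/{}]+\})", template)
    body = "".join("[^/]+" if p.startswith("{") else re.escape(p) for p in pieces)
    return "^" + body + "$"


def spec_to_gateway_config(spec, service_name):
    """Derive a declarative route list (hypothetical schema) from a spec."""
    server = urlparse(spec["servers"][0]["url"])
    base_path = server.path.rstrip("/")
    default_port = 443 if server.scheme == "https" else 80
    config = {
        "upstreams": [{"name": service_name, "host": server.hostname,
                       "port": server.port or default_port}],
        "routes": [],
    }
    # Sorting keeps the output deterministic for the same input spec.
    for path, item in sorted(spec["paths"].items()):
        methods = sorted(m.upper() for m in item if m in HTTP_METHODS)
        config["routes"].append({
            "match": {"path_regex": path_to_regex(base_path + path),
                      "methods": methods},
            "upstream": service_name,
        })
    return config


print(json.dumps(spec_to_gateway_config(PETSTORE, "petstore"), indent=2))
```

Because the output is deterministic, regenerating from an unchanged spec yields an identical config, which keeps diffs clean when the config is kept in version control.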

Open API Support In Enroute Universal API Gateway

Network infrastructure is probably one of the hardest pieces to automate in the software development process. Programming an API gateway from an Open API spec extends automation into this part of the organization.

When an Open API spec is available, the Enroute API gateway can be programmed from it automatically. This kind of automation can substantially increase productivity and removes the error-prone manual step of configuring the gateway. It also provides a way to keep the gateway in sync with the spec.
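One common way to keep a gateway in sync with a spec is a reconcile step: derive the desired routes from the spec, compare them with what the gateway currently has, and act on the difference. The Python sketch below shows only the comparison, as an illustration rather than a description of how Enroute does it. `SPEC`, `GATEWAY_ROUTES` and `diff_routes` are hypothetical; in practice the current routes would be read from the gateway itself, for example from a configuration dump.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}

# Desired state: a hypothetical spec, already parsed.
SPEC = {"paths": {
    "/pets": {"get": {}, "post": {}},
    "/pets/{petId}": {"get": {}, "delete": {}},
}}

# Actual state: hypothetical (METHOD, path) pairs programmed on the gateway.
GATEWAY_ROUTES = [("GET", "/pets"), ("POST", "/pets"),
                  ("GET", "/pets/{petId}"), ("GET", "/toys")]


def routes_from_spec(spec):
    """Set of (METHOD, path) pairs the spec says should exist."""
    return {(m.upper(), path)
            for path, item in spec["paths"].items()
            for m in item if m in HTTP_METHODS}


def diff_routes(spec, gateway_routes):
    """What must be added to, or removed from, the gateway to match the spec."""
    desired = routes_from_spec(spec)
    actual = set(gateway_routes)
    return {"to_add": sorted(desired - actual),
            "to_remove": sorted(actual - desired)}


print(diff_routes(SPEC, GATEWAY_ROUTES))
# {'to_add': [('DELETE', '/pets/{petId}')], 'to_remove': [('GET', '/toys')]}
```

Running this comparison whenever the spec changes, and applying the result, is what keeps the gateway from drifting away from the spec.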

Enroutectl: A CLI Tool To Program Enroute Universal API Gateway

Here we quickly show how enroutectl can be used to program the Enroute Standalone API Gateway. A similar approach works with the Enroute Kubernetes Ingress API Gateway.

Here is the high-level usage for the command:

Run the API Gateway locally:

Program the locally running Enroute Standalone Gateway instance using the Petstore Open API spec:

Dump the configuration of the locally running Enroute API Gateway:

Access the Petstore application through the API Gateway:


For a more detailed description of enroutectl, refer to the article "How to use Open Api Spec to program Enroute Standalone API Gateway".

Telemetry For Your APIs

The Enroute Standalone API Gateway provides telemetry to help you understand how it is performing. This builds on the rich telemetry of Envoy proxy, on top of which Enroute is built.

Enroute comes pre-packaged with Grafana, the open source dashboarding tool, which makes the telemetry interface programmable.

For the Petstore example discussed above, here is a Grafana screen that ships with Enroute.

This is just one way to visualize what your API Gateway is doing. The top left shows the latency histogram of requests. Below it is the state of the Envoy clusters for the swagger upstream, and below that is the operational state of the API Gateway.

The right side shows request statistics: the request rate, responses, and the number of connections to the upstream.

Because Grafana is programmable, you can tweak this screen for your needs. Here is another example (an Envoy Grafana dashboard):

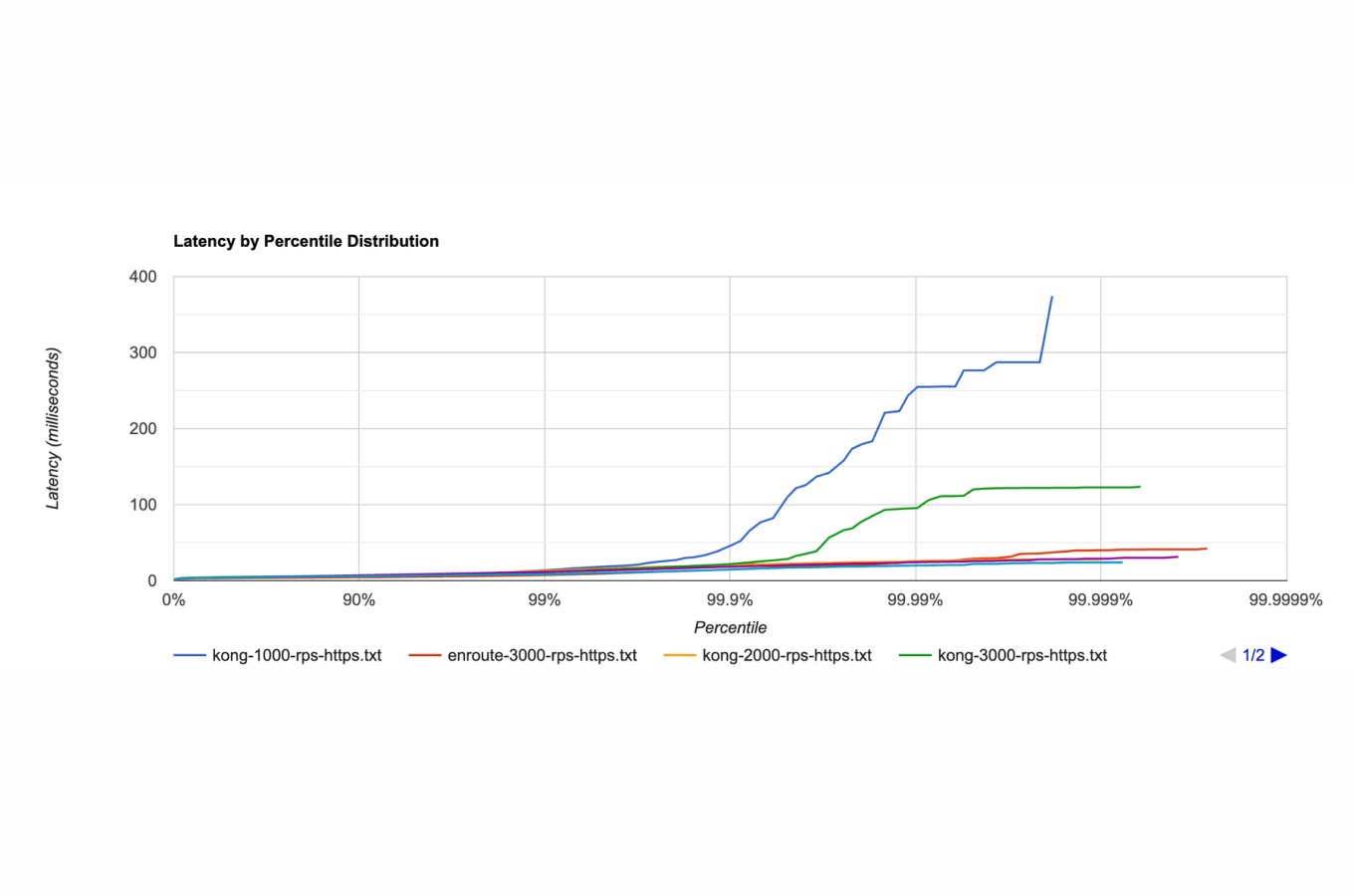
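A latency histogram like the ones on these dashboards is typically stored as cumulative buckets, and percentiles are estimated from them. The Python sketch below assumes Prometheus-style buckets (upper bound, cumulative count, ending with +Inf) and interpolates linearly inside the bucket that holds the target rank, similar in spirit to Prometheus' `histogram_quantile`. The latency numbers are invented for illustration, and the function is not part of Enroute or Grafana.

```python
import math


def histogram_quantile(q, buckets):
    """Estimate the q-quantile (0 < q <= 1) from cumulative histogram buckets.

    `buckets` is a list of (upper_bound, cumulative_count) pairs sorted by
    upper bound and ending with (math.inf, total). Inside the bucket that
    contains the target rank, the value is linearly interpolated, assuming
    observations are spread evenly and the first bucket starts at 0.
    """
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    total = buckets[-1][1]
    if total == 0:
        return math.nan  # no observations yet
    rank = q * total
    lower, below = 0.0, 0
    for upper, count in buckets:
        if count >= rank:
            if math.isinf(upper):
                return lower  # cannot interpolate into +Inf: use last finite bound
            return lower + (upper - lower) * (rank - below) / (count - below)
        lower, below = upper, count
    return math.nan


# Hypothetical request-latency histogram in milliseconds (cumulative counts).
LATENCY_MS = [(5, 40), (10, 70), (25, 90), (50, 98), (100, 100), (math.inf, 100)]

for q in (0.5, 0.9, 0.99):
    print(f"p{round(q * 100)}: {histogram_quantile(q, LATENCY_MS):.2f} ms")
# p50: 6.67 ms
# p90: 25.00 ms
# p99: 75.00 ms
```

The estimate is only as precise as the bucket boundaries allow, which is why histograms intended for latency percentiles usually have finer buckets around the expected range.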

Performance

Envoy is a high-performance proxy written in C++ that provides the raw performance needed by applications requiring high throughput and low latency. Enroute's data path is built on Envoy, and Enroute makes it easy to deploy and configure that data path for API gateway use cases.

Secure Your APIs Using Advanced Rate-Limiting

Enroute includes advanced rate limiting as part of the gateway. Protecting API resources is critical for both internal and external use. Enroute can also enforce different rate limits depending on whether the user is authenticated or not. More information about advanced rate limiting on the Enroute Universal Gateway can be found in the article "Why Every API Needs a Clock".
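Limits that depend on whether the caller is authenticated can be illustrated with a token bucket, a common rate-limiting technique. This Python sketch is not Enroute's implementation: the tier names, the rates in `LIMITS`, and the `TokenBucketLimiter` and `FakeClock` classes are hypothetical. Each (tier, client) pair gets its own bucket that refills at `rate` tokens per second up to `burst`, and a request is allowed only if a whole token is available. The clock is injectable so the behaviour can be checked without sleeping.

```python
import time
from dataclasses import dataclass


@dataclass
class Limit:
    rate: float   # tokens added per second
    burst: float  # bucket capacity (maximum burst size)


# Hypothetical policy: authenticated callers get a larger budget.
LIMITS = {
    "authenticated": Limit(rate=10.0, burst=20.0),
    "anonymous": Limit(rate=1.0, burst=5.0),
}


class TokenBucketLimiter:
    def __init__(self, limits, clock=time.monotonic):
        self.limits = limits
        self.clock = clock
        self.buckets = {}  # (tier, client_id) -> (tokens, last_update_time)

    def allow(self, client_id, authenticated):
        tier = "authenticated" if authenticated else "anonymous"
        limit = self.limits[tier]
        key = (tier, client_id)
        now = self.clock()
        # A new client starts with a full bucket.
        tokens, last = self.buckets.get(key, (limit.burst, now))
        # Refill in proportion to elapsed time, capped at the burst size.
        tokens = min(limit.burst, tokens + (now - last) * limit.rate)
        if tokens >= 1.0:
            self.buckets[key] = (tokens - 1.0, now)
            return True
        self.buckets[key] = (tokens, now)
        return False


class FakeClock:
    """Manually advanced clock for demonstrations and tests."""
    def __init__(self):
        self.t = 0.0

    def __call__(self):
        return self.t


clock = FakeClock()
limiter = TokenBucketLimiter(LIMITS, clock=clock)
print([limiter.allow("203.0.113.7", authenticated=False) for _ in range(7)])
# [True, True, True, True, True, False, False]
clock.t += 1.0  # one second later the anonymous bucket has refilled one token
print(limiter.allow("203.0.113.7", authenticated=False))  # True
```

Keying buckets by tier as well as client ID means that a client who authenticates draws from a separate, larger budget instead of inheriting the state of its anonymous bucket.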

Run Anywhere

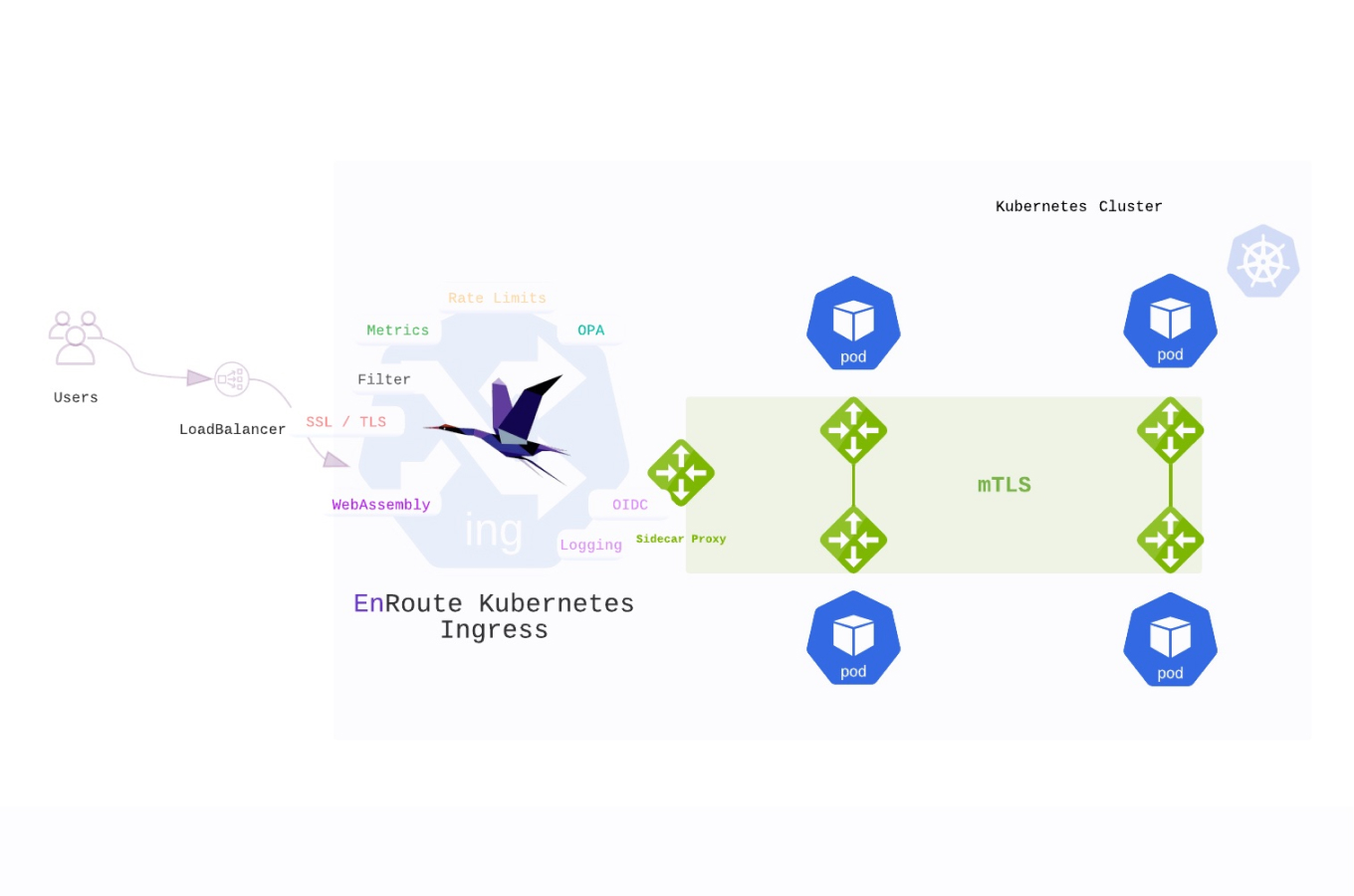

Enroute’s unique architecture allows it to run both as a Standalone Gateway and as a Kubernetes Ingress Gateway. All capabilities that can be configured on the Standalone Gateway are also available on the Kubernetes Ingress.

This matters because applications are deployed across many platforms: on Kubernetes, in the cloud, or in an on-premises data center.

Conclusion

Automating your network infrastructure increases the velocity of the development cycle, saving valuable time otherwise spent configuring and managing components such as an API gateway.

Being able to use declarative configuration for your API gateway, change it, and make it part of your version control and CI/CD processes lets you manage your infrastructure as code.

The Open API spec is the blueprint for building APIs, and the API gateway should work in tandem with this spec so that operations can be automated.

The Enroute Universal API Gateway provides this functionality for both the Standalone API Gateway and the Kubernetes Ingress Gateway.