Introduction

In the modern age of APIs and interconnected web applications, rate limiting is one of the most essential techniques for maintaining system stability and security. Whether you are managing a high-traffic web service, defending against DDoS or brute force attacks, or ensuring fair use of resources, rate limiting helps keep your system stable.

In this article, we break down the concept of rate limiting in a comprehensible way. We explain why it is essential, discuss different rate limiting algorithms, and share how rate limits can be used effectively in your system design.

What is Rate Limiting?

Rate limiting is a method of controlling how many requests a user or device can make to a system within a given time period. For example, we can restrict API users from making more than 100 requests per minute. If a user exceeds the limit, they receive an error response with the HTTP status code "429 Too Many Requests".

Why Do We Need Rate Limiting?

Rate limiting is essential for several reasons:

- Preventing Abuse: Without rate limiters, malicious actors could flood your system with a high volume of requests, consuming resources and potentially causing resource starvation for other users.

- Preventing DDoS Attacks: Distributed Denial of Service (DDoS) attacks are one of the most common types of cyberattacks, in which attackers flood a system with a high volume of traffic from multiple sources to take it offline. A rate limiter caps the number of requests allowed from each IP address or user, which helps mitigate these attacks.

- Ensuring Fair Use: In multi-tenant systems, where multiple users or clients share resources, it's important to make sure no single user consumes an unfair amount of them. Rate limiting caps each user's requests so that every user gets a fair share of the system's resources.

- Preserving System Resources: Servers have limited CPU, memory, and bandwidth. If too many users send requests simultaneously, the system can slow down or crash. Rate limiters preserve these resources for legitimate users.

- Cost Management: For services that charge per API call, rate limiting helps you manage operational costs by capping the number of requests users can make, which prevents runaway costs from excessive traffic.

Types of Rate Limiting Algorithms

You can apply different rate limiting algorithms to control system resource consumption. Each algorithm controls requests in its own way. The following are the common rate limiting algorithms we are going to discuss.

1. Fixed Window Counter

The fixed window counter is one of the simplest rate limiting algorithms. It divides the timeline into fixed time spans, called windows, and counts the requests made within each window (e.g., 100 requests per minute). If a user hits the limit before the current window ends, subsequent requests are blocked until the next window begins and the counter resets.

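- Pros:
  - Easy to implement and maintain.
  - Easy to manage traffic flow.
- Cons:
  - Best suited to steady (static) traffic.
  - Requests at the edges of the time windows are hard to control: a client can send a full quota at the end of one window and another full quota at the start of the next, briefly reaching twice the intended rate.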
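A minimal in-memory sketch of the fixed window counter in Python follows. The class name `FixedWindowCounter` and the optional `now` parameter (which lets you pass a timestamp instead of reading the clock, so the behavior is easy to test) are illustrative assumptions, not part of any library. A production limiter would also need locking and eviction of stale entries.

```python
import time
from typing import Dict, Optional, Tuple


class FixedWindowCounter:
    """Allows at most `limit` requests per client in each fixed time window."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        # client_id -> (index of the window being counted, requests seen in it)
        self._counts: Dict[str, Tuple[int, int]] = {}

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        window = int(now // self.window_seconds)  # which fixed window `now` falls into
        counted_window, count = self._counts.get(client_id, (window, 0))
        if counted_window != window:
            count = 0  # a new window has started, so the counter resets
        if count >= self.limit:
            return False  # limit reached: block until the next window
        self._counts[client_id] = (window, count + 1)
        return True
```

For example, with `FixedWindowCounter(limit=3, window_seconds=60)`, three requests at t=59 and three more at t=60 are all accepted because they fall into different windows. That is the edge-of-window burst described in the cons.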

2. Leaky Bucket Algorithm

The leaky bucket algorithm uses a fixed-size bucket that leaks (empties) at a constant rate. Each incoming request is added to the bucket; when the bucket is full, new requests are rejected. Because requests drain out at a steady rate no matter how bursty the input is, the algorithm smooths traffic and helps distribute resources fairly among users.

- Pros:
  - Smooths the outgoing traffic rate.
  - Incoming requests can be queued for processing instead of being rejected immediately.
- Cons:
  - Picking the right bucket size is challenging.
  - Choosing the right leak (outflow) rate can be tricky.
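The sketch below implements the leaky bucket "as a meter": it tracks a level that drains at `leak_rate` requests per second and rejects a request when adding it would overflow `capacity`. This is a simplification under stated assumptions (one bucket per client, in memory, hypothetical names). The queueing variant described above would instead hold accepted requests in a FIFO queue and release them to the backend at the leak rate.

```python
import time
from typing import Optional


class LeakyBucket:
    """Leaky bucket as a meter: the level drains at a constant rate; overflow is rejected."""

    def __init__(self, capacity: float, leak_rate: float) -> None:
        self.capacity = capacity    # maximum number of requests the bucket can hold
        self.leak_rate = leak_rate  # requests drained from the bucket per second
        self.level = 0.0
        self.last: Optional[float] = None

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if self.last is not None:
            # Drain whatever leaked out since the previous request.
            self.level = max(0.0, self.level - (now - self.last) * self.leak_rate)
        self.last = now
        if self.level + 1 > self.capacity:
            return False  # bucket is full: reject
        self.level += 1
        return True
```

Here `capacity` bounds how much burst the bucket absorbs and `leak_rate` is the sustained rate, which are exactly the two parameters the cons above call hard to pick.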

3. Sliding Window Rate Limiting

Instead of resetting at fixed intervals, the sliding window algorithm uses a window that moves continuously with time (for example, "the last 60 seconds"). It keeps a log of requests and blocks users who exceed the limit within the sliding window. This approach refines the fixed window counter and avoids its bursts at window boundaries.

- Pros:
  - Better user experience.
  - Smooth request distribution.
- Cons:
  - Complex to implement and maintain.
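One common way to implement the sliding window is a per-client log of request timestamps, sketched below assuming a single process and in-memory storage (`SlidingWindowLog` is a hypothetical name). Each check discards timestamps that have slid out of the window, so the limit always applies to the most recent `window_seconds`.

```python
import time
from collections import deque
from typing import Deque, Dict, Optional


class SlidingWindowLog:
    """Allows at most `limit` requests per client in any `window_seconds` span."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self._logs: Dict[str, Deque[float]] = {}  # client_id -> accepted request times

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        log = self._logs.setdefault(client_id, deque())
        # Drop timestamps outside the window (now - window_seconds, now].
        while log and log[0] <= now - self.window_seconds:
            log.popleft()
        if len(log) >= self.limit:
            return False  # too many requests in the last window_seconds
        log.append(now)
        return True
```

Unlike the fixed window counter, three requests at t=59 with `limit=3` and `window_seconds=60` block further requests until t=119. The cost is memory, since the log stores one timestamp per accepted request. A frequently used approximation, the sliding window counter, keeps only the counts for the current and previous fixed windows and weights the previous count by how much of it still overlaps the sliding window.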

4. Token Bucket Algorithm

The token bucket algorithm adds tokens to a bucket at a fixed rate, up to a maximum capacity. Each incoming request must take a token from the bucket to be processed; if no tokens are available, the request is blocked. This controls the average request rate while still allowing short bursts up to the bucket's capacity.

- Pros:
  - It is easy to control the overall rate.
  - Flexible: short bursts are allowed as long as tokens are available.
- Cons:
  - Slightly more complex to implement.
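A minimal single-client token bucket sketch follows. It refills lazily: instead of running a timer, it computes how many tokens were generated since the last check. The names `TokenBucket`, `capacity`, and `refill_rate` are illustrative, not taken from any particular library.

```python
import time
from typing import Optional


class TokenBucket:
    """Tokens refill at `refill_rate` per second up to `capacity`; each request spends one."""

    def __init__(self, capacity: float, refill_rate: float) -> None:
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.tokens = float(capacity)  # start full, so an initial burst is allowed
        self.last: Optional[float] = None

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if self.last is not None:
            # Add the tokens generated since the last check, capped at capacity.
            elapsed = now - self.last
            self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last = now
        if self.tokens < 1:
            return False  # no token available: block the request
        self.tokens -= 1
        return True
```

With `capacity=5` and `refill_rate=1.0`, a client can send 5 requests at once and is then held to about one request per second. Capacity sets the burst size and refill rate sets the long-run average, and the two can be tuned independently.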

5. Exponential Backoff

Exponential backoff is a dynamic rate limiting method that works like a feedback control loop: the client must wait longer after each failed request. For example, if a client fails to connect to the server, it may retry, and before each retry it waits longer than it did before the previous one. Because the wait time grows exponentially (for example, 1, 2, 4, then 8 seconds), the system is protected from frequent retry attempts.

- Pros:
  - Useful for handling retries.
- Cons:
  - Not as precise as other algorithms in controlling the request flow.
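Exponential backoff is usually applied on the client side when a server answers with 429. The sketch below retries a call with an upper bound on the wait that doubles on every attempt (`base_delay`, `2 * base_delay`, ...) until it reaches `max_delay`. It also adds random "full jitter", a common refinement not described above, so that many clients do not retry in lockstep. `RateLimited` is a hypothetical exception your HTTP client wrapper would raise on a 429 response, and the `sleep` parameter exists only so the function can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error raised by a client wrapper when the server returns 429."""


def call_with_backoff(
    func: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    for attempt in range(max_retries):
        try:
            return func()
        except RateLimited:
            # Upper bound doubles on each failure: 0.5 s, 1 s, 2 s, 4 s, ... capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: wait a random time in [0, delay] so clients spread out their retries.
            sleep(random.uniform(0, delay))
    return func()  # final attempt; if it is still rate limited, the error propagates
```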

Best Practices for Implementing Rate Limiting

When choosing a rate limiter for your system, you need to balance a good user experience with system protection. Here are some best practices:

1. Define Rate Limits Clearly

Document your API rate limits transparently for users, stating how many requests a user can make in a given time period. It is also good practice to return an informative error response when a request is dropped, for example: "You have made 101 requests in the last minute."

2. Use Graceful Error Handling

Always return a clear error message along with the appropriate status code (429 Too Many Requests). This helps users understand why their request was denied and when they can retry, so they can handle API calls effectively.
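As a framework-agnostic sketch, the function below builds the status code, headers, and JSON body of a 429 response. `Retry-After` is a standard HTTP header that RFC 6585 suggests including with 429 responses to say how long to wait; the function name `too_many_requests` and the JSON field names are hypothetical choices, not a required format.

```python
import json
import math
from typing import Dict, Tuple


def too_many_requests(
    limit: int, window_seconds: int, retry_after_seconds: float
) -> Tuple[int, Dict[str, str], str]:
    """Return (status, headers, body) for a rate-limited request."""
    retry_after = max(1, math.ceil(retry_after_seconds))  # Retry-After uses whole seconds
    body = {
        "error": "rate_limit_exceeded",
        "message": (
            f"Limit of {limit} requests per {window_seconds} seconds exceeded. "
            f"Retry in {retry_after} seconds."
        ),
    }
    headers = {"Content-Type": "application/json", "Retry-After": str(retry_after)}
    return 429, headers, json.dumps(body)
```

Your web framework would copy these three values into its own response object; a client can read `Retry-After` instead of guessing how long to back off.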

3. Different Limits for Different Users

Providers often offer different packages, such as free and premium tiers, where free-tier users are allowed fewer requests than premium users. Tiered limits also ensure that resources are distributed fairly according to user type.
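A sketch of tier-dependent limits: the limiter looks up each user's limit from their tier before counting. The tier names and numbers in `TIER_LIMITS` are hypothetical, and the counting reuses a simple in-memory fixed window for brevity; any of the algorithms above could sit behind the lookup.

```python
import time
from typing import Dict, Optional, Tuple

# Hypothetical tiers and per-minute limits; real values are a product decision.
TIER_LIMITS: Dict[str, int] = {"free": 60, "premium": 600}


class TieredLimiter:
    """Fixed window counter whose limit depends on the caller's subscription tier."""

    def __init__(self, tier_limits: Dict[str, int], window_seconds: float = 60.0) -> None:
        self.tier_limits = tier_limits
        self.window_seconds = window_seconds
        self._counts: Dict[str, Tuple[int, int]] = {}  # user_id -> (window index, count)

    def allow(self, user_id: str, tier: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        limit = self.tier_limits[tier]  # look up the limit for this user's package
        window = int(now // self.window_seconds)
        counted_window, count = self._counts.get(user_id, (window, 0))
        if counted_window != window:
            count = 0  # new window: reset the count
        if count >= limit:
            return False
        self._counts[user_id] = (window, count + 1)
        return True
```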

4. Rate Limiting Based on IP Addresses

Limiting requests by IP address is a common practice when providing a public API. However, many users behind a proxy or in a shared IP environment (such as a corporate network) can appear to come from the same address. Be careful not to unfairly penalize these users by applying overly strict limits.

5. Rate Limiting by Endpoint

Different API endpoints provide different services, and some need more resources than others. For example, a search endpoint typically needs more resources than a simple GET endpoint. In this case, apply different rate limits per endpoint for fair resource distribution. Fine-grained, per-endpoint limits make API rate limiting more effective.
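The sketch below combines the last two practices: it keeps one token bucket per (client IP, endpoint) pair and takes the bucket's size from a per-endpoint table. The paths and numbers in `ENDPOINT_LIMITS` and `DEFAULT_LIMIT` are hypothetical. Because clients behind a shared IP share a bucket, keying on an API key or user ID instead of the IP may be fairer when one is available.

```python
import time
from typing import Dict, Optional, Tuple

# Hypothetical per-endpoint limits: (burst capacity, refill rate in requests per second).
ENDPOINT_LIMITS: Dict[str, Tuple[float, float]] = {
    "/search": (5, 0.5),   # expensive endpoint: small bursts, ~30 requests per minute
    "/items": (50, 10.0),  # cheap GET endpoint: large bursts, ~600 requests per minute
}
DEFAULT_LIMIT: Tuple[float, float] = (20, 2.0)


class EndpointLimiter:
    """Token bucket per (client IP, endpoint) pair, with limits chosen per endpoint."""

    def __init__(self, limits: Dict[str, Tuple[float, float]], default: Tuple[float, float]) -> None:
        self.limits = limits
        self.default = default
        # (client_ip, endpoint) -> (tokens left, time of last refill)
        self._buckets: Dict[Tuple[str, str], Tuple[float, float]] = {}

    def allow(self, client_ip: str, endpoint: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        capacity, rate = self.limits.get(endpoint, self.default)
        key = (client_ip, endpoint)
        tokens, last = self._buckets.get(key, (capacity, now))  # new buckets start full
        tokens = min(capacity, tokens + (now - last) * rate)  # refill since last check
        if tokens < 1:
            self._buckets[key] = (tokens, now)
            return False
        self._buckets[key] = (tokens - 1, now)
        return True
```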

6. Monitoring and Logging

Always log client activity along with the client IP address and other relevant metadata. This monitoring helps you trace suspicious activity, such as brute force and DDoS attacks, and find issues with your rate limiting policies.
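A small sketch using Python's standard `logging` module: every rate limit decision is logged with the client IP, endpoint, and user, and rejections are tallied per IP so repeat offenders can be flagged for review. The logger name, message format, and in-memory `rejections_by_ip` counter are assumptions for illustration; a real deployment would likely ship these records to a log or metrics system.

```python
import logging
from collections import Counter
from typing import List

logger = logging.getLogger("ratelimit")
rejections_by_ip: Counter = Counter()  # in-memory tally of rejected requests per IP


def record_decision(client_ip: str, endpoint: str, user_id: str, allowed: bool) -> None:
    """Log a rate limit decision with the client's IP and request metadata."""
    if not allowed:
        rejections_by_ip[client_ip] += 1
    # Rejections are logged at WARNING so they stand out; allowed requests at DEBUG.
    logger.log(
        logging.DEBUG if allowed else logging.WARNING,
        "rate_limit decision=%s ip=%s endpoint=%s user=%s",
        "allow" if allowed else "reject", client_ip, endpoint, user_id,
    )


def suspicious_ips(threshold: int) -> List[str]:
    """IPs rejected at least `threshold` times, e.g. candidates for a brute force review."""
    return [ip for ip, n in rejections_by_ip.items() if n >= threshold]
```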

Common Rate Limiting Implementations in Open-Source Systems

Many open-source tools and platforms already implement rate limiting effectively. Here are some examples:

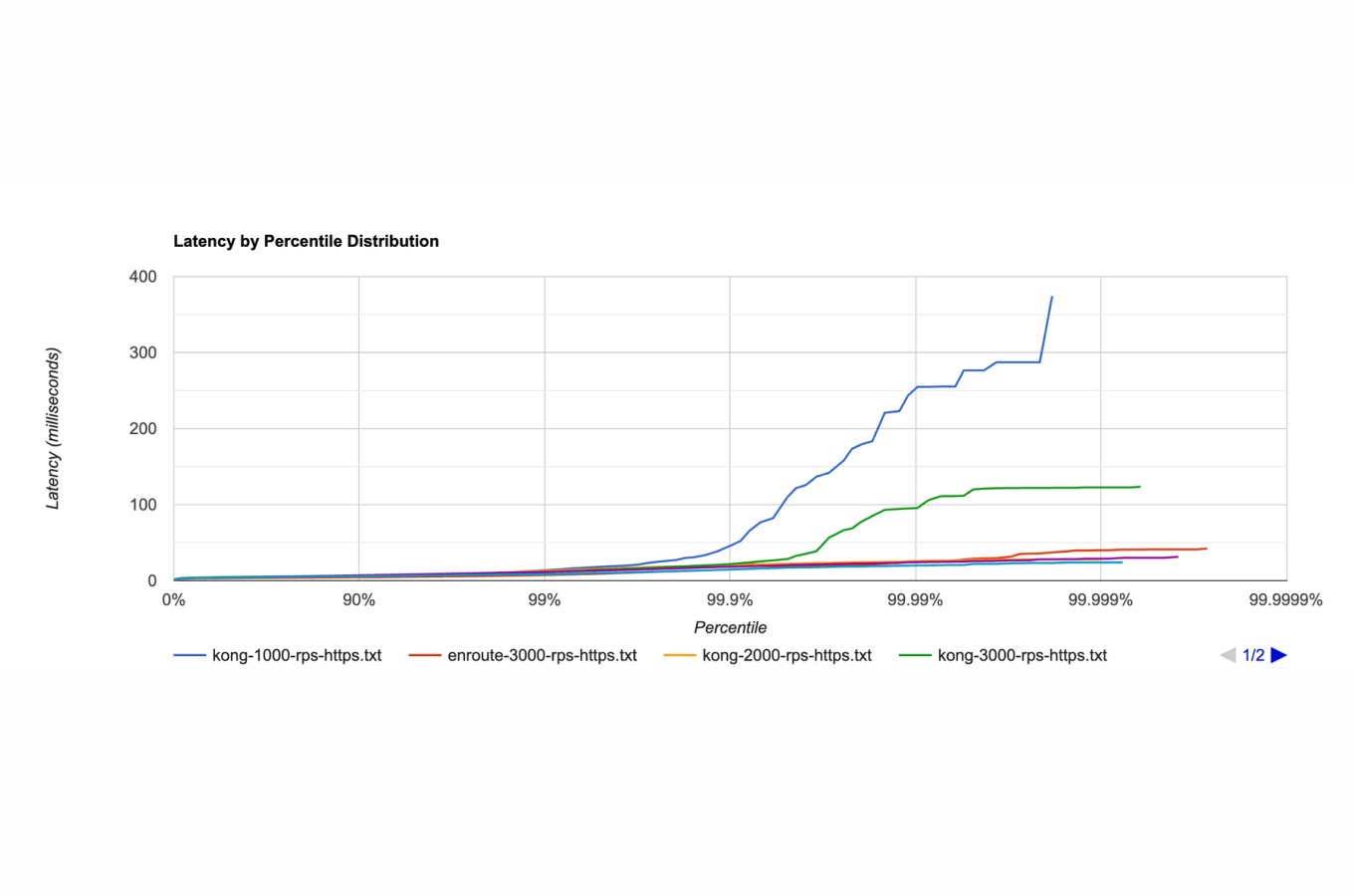

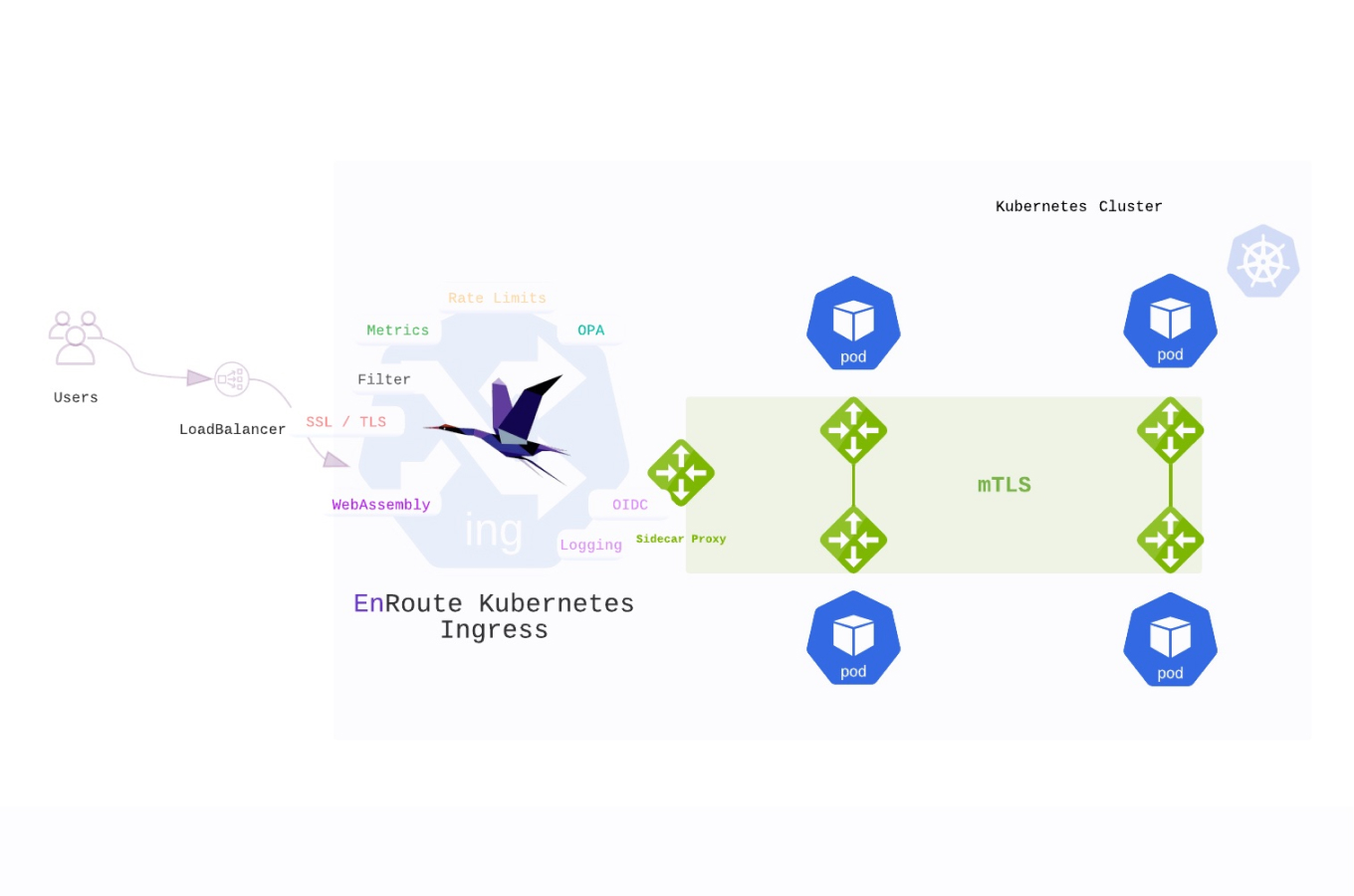

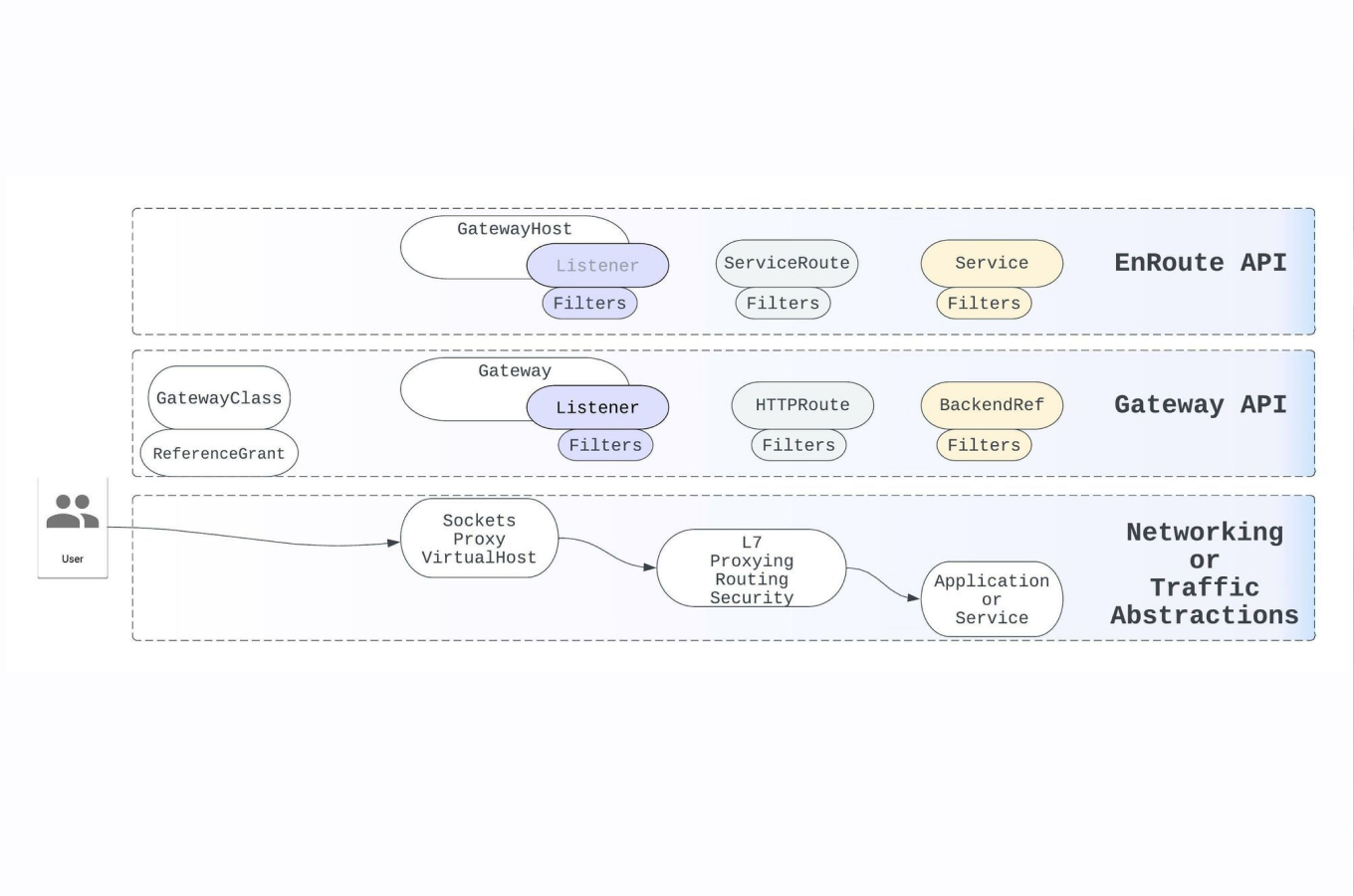

1. EnRoute

EnRoute provides a very rich implementation of rate limiting. Any L7 (application-layer) state can be mixed and matched to perform rate limiting. For example, limits can be based on the user, the service they are accessing, a specific path or method, or whether they are calling an AI API, all using EnRoute's extensible rate-limit mechanism.

2. Nginx

Nginx is a popular web server that comes with rate limiting based on the leaky bucket algorithm. You can configure this feature in the Nginx configuration file to limit the rate of requests, for example per client IP address.


3. Redis

Redis is commonly used as a shared store for implementing rate limiting algorithms such as the token bucket. Because all servers in a distributed system consult the same counters, users get the same limits regardless of which server they hit.
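The sketch below shows the shared-counter idea with the `redis-py` client, assumed to be passed in as `client` (for example `redis.Redis(host="localhost")`). Note that it swaps in a fixed window counter built on Redis's `INCR` and `EXPIRE` commands rather than a token bucket, because that is the shortest correct pattern; a token bucket in Redis usually needs a server-side Lua script so that its read-refill-write step stays atomic. The function name and key format are hypothetical.

```python
import time
from typing import Any, Optional


def allow_request(
    client: Any,
    user_id: str,
    limit: int = 100,
    window_seconds: int = 60,
    now: Optional[float] = None,
) -> bool:
    """Fixed window counter shared by all app servers through Redis.

    `client` is assumed to be a redis-py client such as redis.Redis().
    """
    now = time.time() if now is None else now
    window = int(now // window_seconds)
    key = f"ratelimit:{user_id}:{window}"  # one counter per user per window
    pipe = client.pipeline()  # redis-py pipelines wrap the commands in MULTI/EXEC by default
    pipe.incr(key)  # atomic increment; a missing key starts from 0
    pipe.expire(key, window_seconds)  # counters for old windows expire on their own
    count, _ = pipe.execute()
    return count <= limit
```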

4. AWS API Gateway

Amazon API Gateway lets developers define rate limits through built-in configuration. Developers can set custom limits based on their needs, such as per client, per day, or per month. There is no need to buy an extra AWS service to change or update these settings.

5. Kong Gateway

Kong Gateway supports both fixed window counter and sliding window rate limiting. In a distributed deployment, it can share its counters through a backend such as Redis.

Rate Limiting For AI

When using the APIs of Large Language Models (LLMs), such as OpenAI's, there are strict limits on API calls per minute and tokens per minute. These rate limits are enforced to keep high demand from overwhelming the system, and because model inference is computationally intensive. Additionally, inference for these models runs on GPUs, which are scarce.

Organizations running into this challenge typically use a mix of models with different rate limits (for both the number of API calls and the token rate) to control the request rate and costs. The service tier also differs, for example between a free user and a paid user, and needs to be considered when enforcing rate limits.

A rate limit engine needs to support these scenarios to effectively enable generative AI applications.
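To enforce both kinds of LLM limits at once, a sketch can keep two token buckets per user, one for requests per minute and one for tokens per minute, sized from the user's tier. All names and numbers here (`TIER_LIMITS`, the free and paid values, `estimated_tokens`) are hypothetical and are not any provider's actual limits; in practice the token count might be estimated from the prompt length plus the requested maximum output.

```python
import time
from typing import Dict, Optional, Tuple

# Hypothetical per-tier limits: (requests per minute, tokens per minute).
TIER_LIMITS: Dict[str, Tuple[int, int]] = {"free": (3, 40_000), "paid": (60, 1_000_000)}


class Bucket:
    """Token bucket that refills `per_minute` units evenly over each minute."""

    def __init__(self, per_minute: float, now: float) -> None:
        self.capacity = per_minute
        self.rate = per_minute / 60.0  # units added per second
        self.level = per_minute        # start full
        self.last = now

    def refill(self, now: float) -> None:
        self.level = min(self.capacity, self.level + (now - self.last) * self.rate)
        self.last = now


class LLMRateLimiter:
    def __init__(self, tier_limits: Dict[str, Tuple[int, int]]) -> None:
        self.tier_limits = tier_limits
        self._buckets: Dict[str, Tuple[Bucket, Bucket]] = {}  # user -> (requests, tokens)

    def allow(self, user_id: str, tier: str, estimated_tokens: int,
              now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if user_id not in self._buckets:
            rpm, tpm = self.tier_limits[tier]
            self._buckets[user_id] = (Bucket(rpm, now), Bucket(tpm, now))
        requests, tokens = self._buckets[user_id]
        requests.refill(now)
        tokens.refill(now)
        # Admit only if BOTH budgets can cover the call, so a rejection consumes nothing.
        if requests.level < 1 or tokens.level < estimated_tokens:
            return False
        requests.level -= 1
        tokens.level -= estimated_tokens
        return True
```

Small prompts tend to hit the requests-per-minute limit first, while large prompts exhaust the tokens-per-minute budget after only a few calls, which is why both limits are checked together.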

How to Choose the Best Rate Limiting Method

When choosing a rate limiter for your system, consider the following core points.

- Traffic Patterns: For steady traffic, the fixed window counter or sliding window algorithm may be more appropriate than the others. For bursty traffic, the token bucket algorithm is a good fit because it allows short bursts while capping the average rate.

- System Load: If you are more concerned about overloading servers, consider the leaky bucket algorithm, which processes requests at a steady rate and keeps your system from being overloaded.

- User Experience: For public API services, the sliding window algorithm is one of the best choices, because its continuously moving time window avoids sudden resets and bursts at window boundaries.

- Endpoint Sensitivity: If some API endpoints are more resource-heavy than others, consider applying separate rate limits for each endpoint. This allows you to control usage more precisely and protect your most resource-intensive routes.

Conclusion

Rate limiting is an essential tool for systems that handle large traffic volumes and need to provide smooth service to their users. It protects the system from DDoS and brute force attacks and ensures resources are distributed fairly according to user policy.